Revolutionizing AI for All

Falcon 3 now offers new multimodal capabilities, processing not only text, but also images, and for the first time in the Falcon series, video and audio. These enhancements open exciting new possibilities for media analysis and interactive user experiences.

Falcon 3 has been meticulously designed with multimodal capabilities in mind. The addition of image, video, and audio support further elevates the Falcon 3 family, pushing the limits of open-source AI with unprecedented performance and usability. As an open-source large language model (LLM), Falcon 3 is designed to democratize advanced AI by combining outstanding performance with the ability to run on lightweight devices, including laptops.

Released under TII’s Falcon License 2.0, Falcon 3 is a pioneering step toward making advanced AI tools available to all.

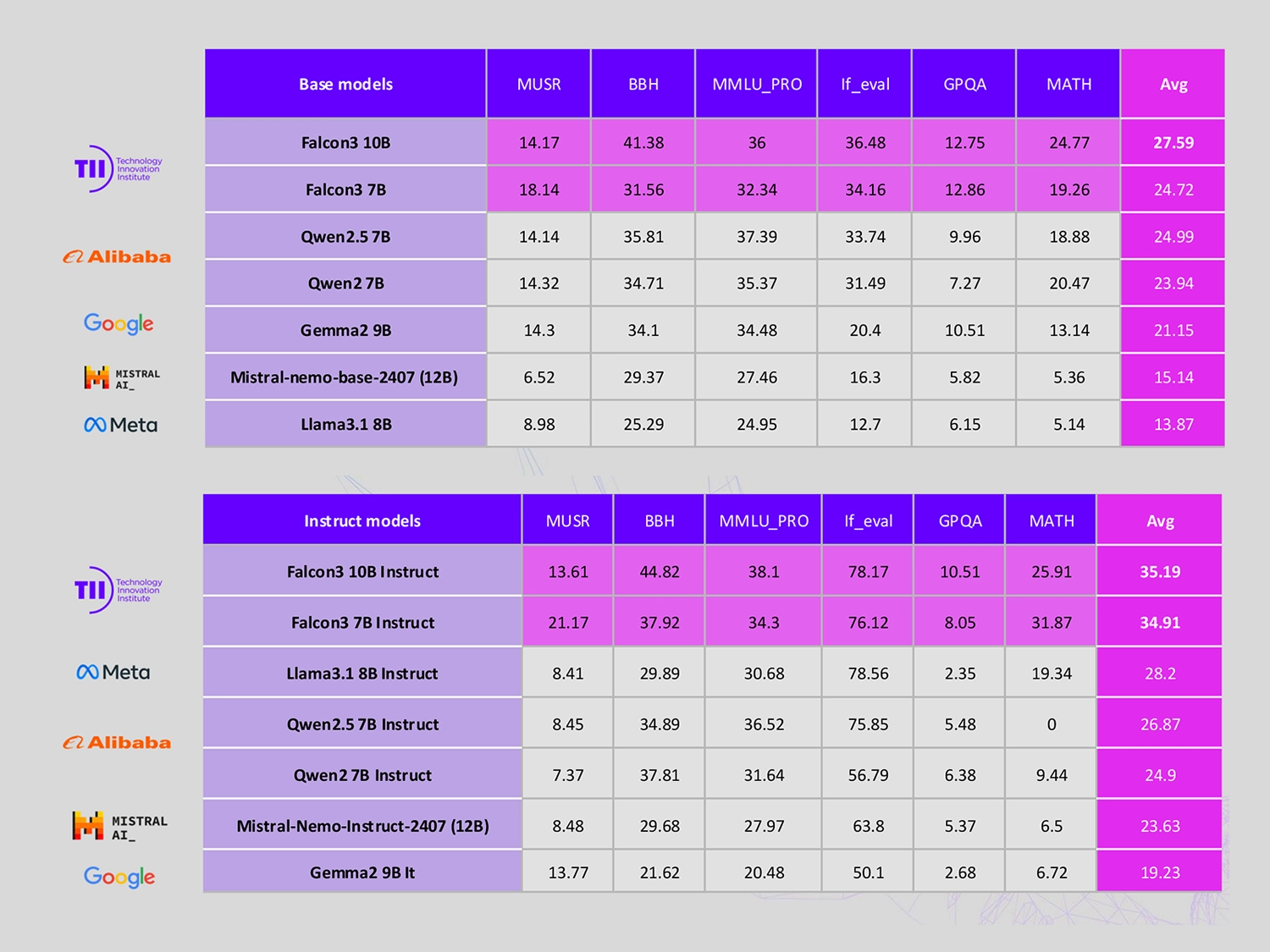

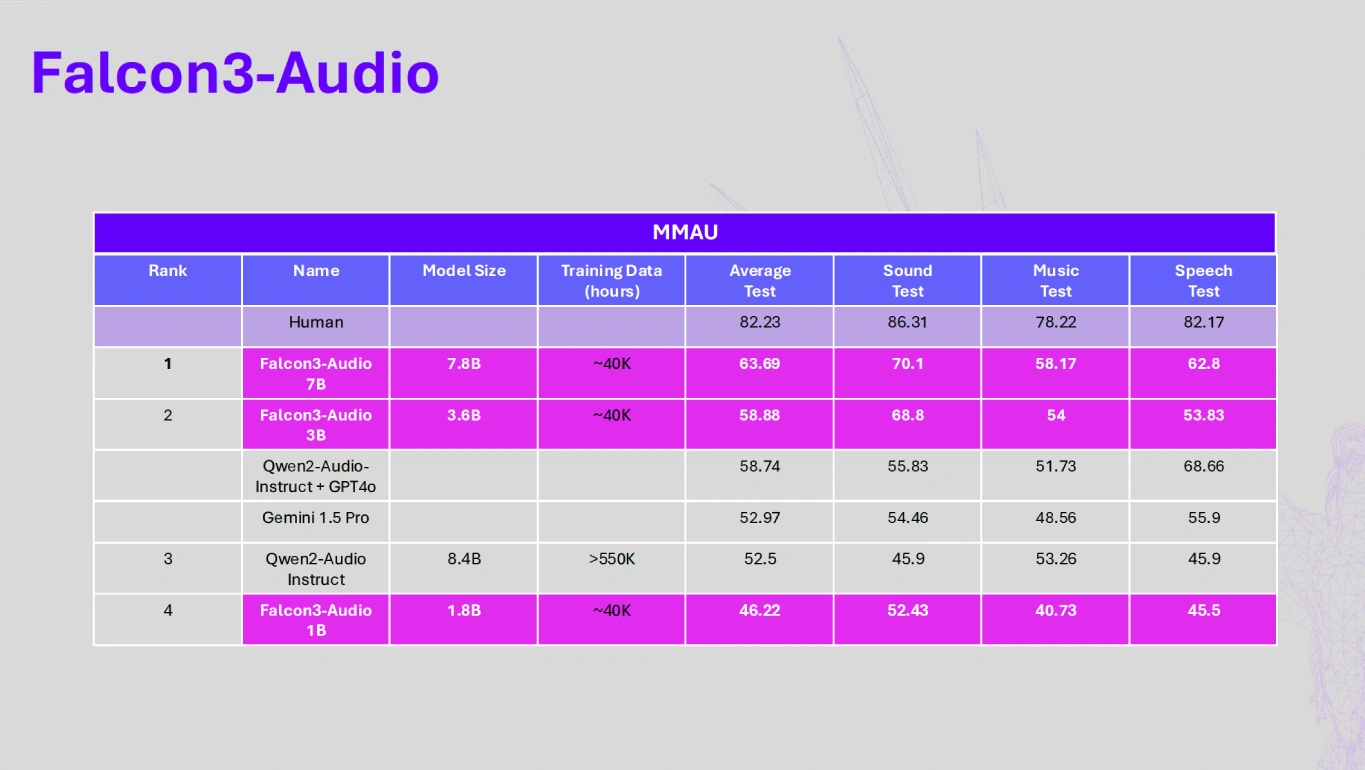

Benchmark

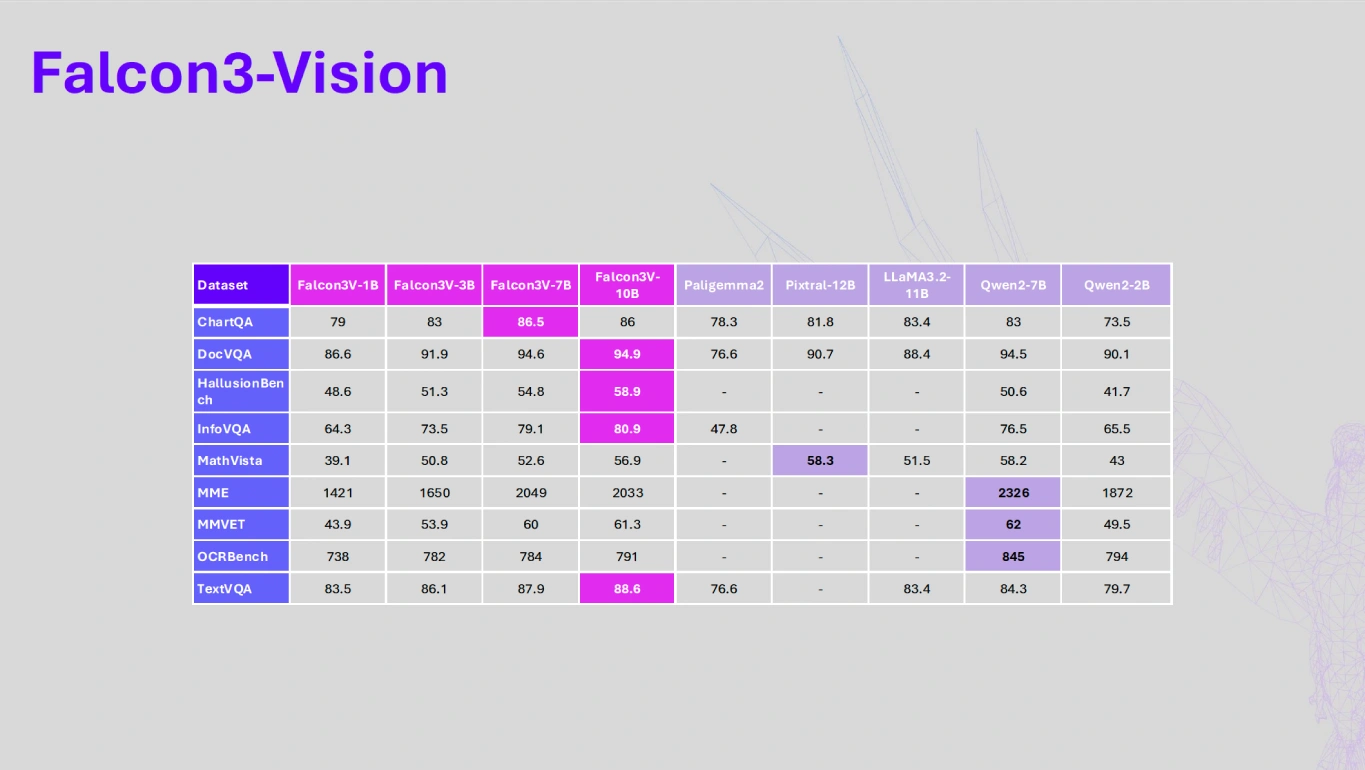

Falcon3 - Vision

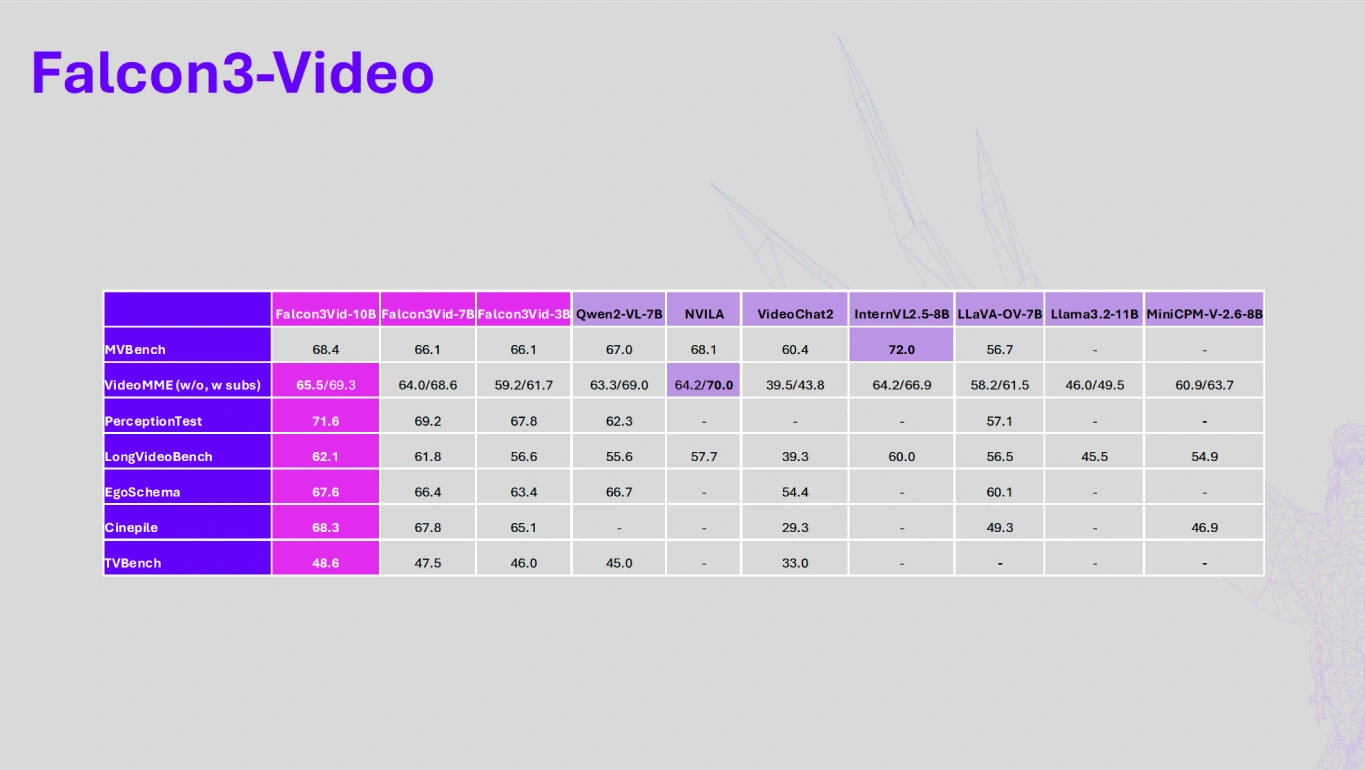

Falcon3 - Video

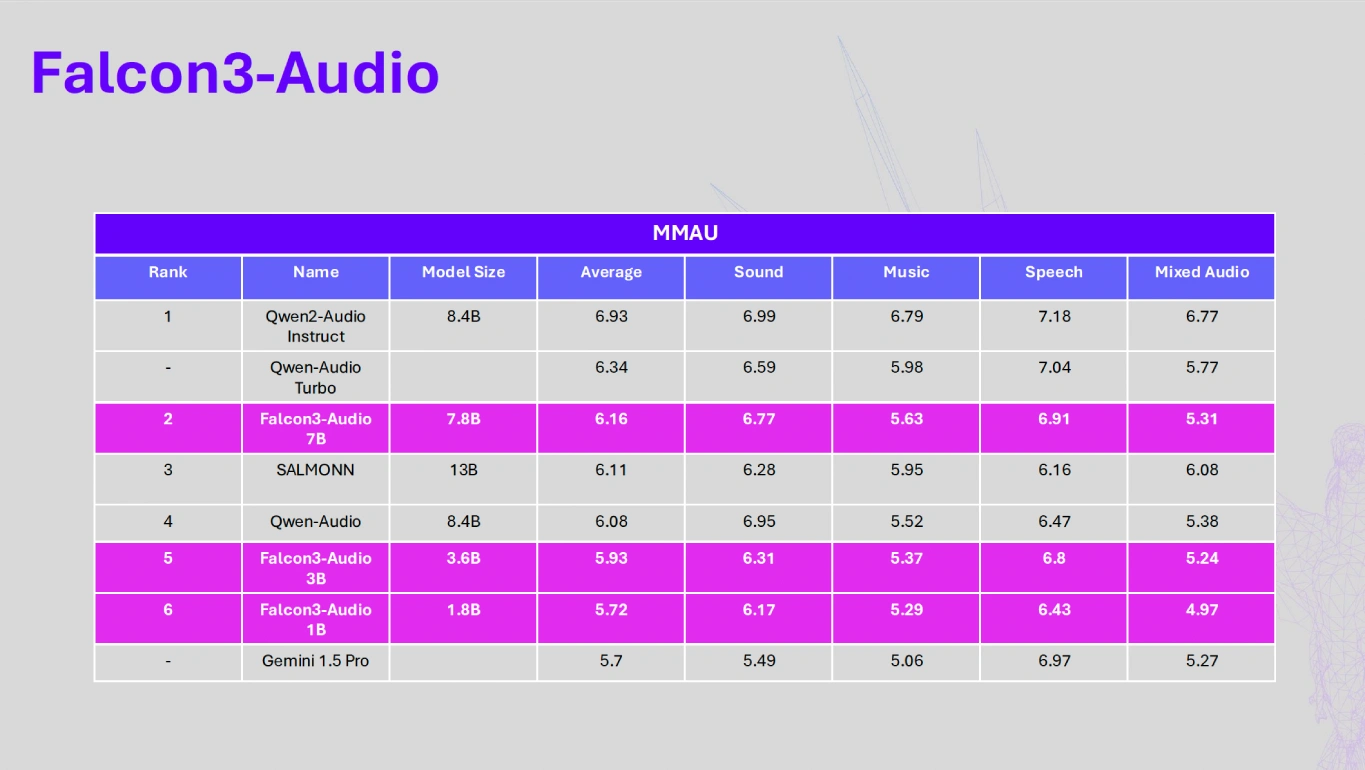

Falcon3 - Audio

New multimodal functionalities: Falcon 3 Vision, Video, and Audio

Falcon 3’s architecture is based on a decoder-only design that uses FlashAttention-2 and Grouped Query Attention (GQA). GQA shares key and value heads across groups of query heads, which reduces the memory needed for the Key-Value (KV) cache during inference and makes generation faster and more efficient.

With a tokenizer supporting a large vocabulary of 131K tokens, double that of Falcon 2, Falcon 3 offers superior compression and improved downstream performance, enhancing its ability to handle diverse tasks.

Trained natively with a 32K context size, Falcon 3 demonstrates exceptional long-context capabilities, delivering enhanced performance for extended input data compared to its predecessors.
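To make the GQA memory saving concrete at a 32K context, the following is a back-of-the-envelope sketch rather than a measurement. The layer count, head counts, and head dimension are illustrative assumptions, not figures from this announcement, and `kv_cache_bytes` is a hypothetical helper. It assumes 16-bit cache values and a batch size of 1.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   bytes_per_value=2, batch_size=1):
    """Approximate KV-cache size in bytes.

    Each layer stores one key vector and one value vector (hence the
    factor of 2) per KV head and per token.
    """
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * bytes_per_value * batch_size)


# Illustrative configuration (assumed, not taken from the announcement).
NUM_LAYERS = 28
NUM_QUERY_HEADS = 12
NUM_KV_HEADS = 4       # GQA: each KV head is shared by 3 query heads
HEAD_DIM = 256
CONTEXT = 32 * 1024    # 32K tokens

# Without GQA, every query head would have its own key/value head.
mha = kv_cache_bytes(NUM_LAYERS, NUM_QUERY_HEADS, HEAD_DIM, CONTEXT)
# With GQA, only the shared KV heads are cached.
gqa = kv_cache_bytes(NUM_LAYERS, NUM_KV_HEADS, HEAD_DIM, CONTEXT)

GIB = 2 ** 30
print(f"Full multi-head KV cache: {mha / GIB:.1f} GiB")
print(f"GQA KV cache:             {gqa / GIB:.1f} GiB")
print(f"Reduction factor:         {mha / gqa:.0f}x")
```

Under these assumed numbers the cache shrinks from about 10.5 GiB to about 3.5 GiB for a single 32K-token sequence, in proportion to the ratio of query heads to KV heads.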

Falcon 3 Vision, Video, and Audio all provide modality-to-text capabilities, enabling seamless ecosystem integration and potential cooperation between models. The multimodal version of Falcon 3 supports English for audio, video, and image inputs.

Advanced AI for Everyone, Everywhere

Falcon’s quantized versions, available in formats such as GGUF, AWQ, and GPTQ (in int4, int8, and 1.58-bit BitNet), make it highly efficient, even in resource-constrained environments. Optimized for lightweight systems, Falcon 3 is a game-changer. The latest update to the Falcon family comprises four multimodal models (1B, 3B, 7B, and 10B), now featuring text, image, video, and audio analysis tailored for different needs. Falcon 3 models can be deployed through tools like vLLM, llama.cpp, and MLX, ensuring seamless adoption for developers.

These innovations reflect our commitment to ensuring AI is accessible and efficient for a wide range of users.
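As a rough illustration of why quantization matters on lightweight devices, the sketch below estimates the memory taken by model weights alone at different bit widths. It assumes nominal parameter counts (exactly 1, 3, 7, and 10 billion), adds a 16-bit baseline that is not mentioned above, and ignores quantization metadata such as scales, as well as activations and the KV cache. `weight_memory_gb` is a hypothetical helper, not part of any Falcon tooling.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Nominal parameter counts for the four model sizes (assumed round numbers).
MODEL_SIZES = {"1B": 1e9, "3B": 3e9, "7B": 7e9, "10B": 10e9}

# Bit widths: the 16-bit baseline is an assumption added for comparison.
BIT_WIDTHS = {"16-bit": 16, "int8": 8, "int4": 4, "1.58-bit": 1.58}

for name, params in MODEL_SIZES.items():
    row = ", ".join(
        f"{label}: {weight_memory_gb(params, bits):.2f} GB"
        for label, bits in BIT_WIDTHS.items()
    )
    print(f"{name:>3} -> {row}")
```

Real quantized files are somewhat larger than these estimates because formats store per-group scales and often keep some layers at higher precision.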

Falcon 3 is versatile, designed for both general-purpose and specialized tasks, providing immense flexibility to users.

Its Base model is perfect for generative applications, while the Instruct model excels in conversational tasks like customer service or virtual assistants.

Falcon 3 is straightforward to implement, whether you’re a startup seeking to enhance user experience or a researcher exploring innovative AI applications. For organizations and individuals with limited computational resources, Falcon 3’s quantized versions offer rapid deployment and optimized efficiency without compromising performance.